An Interview with Sean Mitchell, Chief Commercial Officer of Ubotica

What is your role at Ubotica and how long have you been with the company?

My name is Sean Mitchell and I am the Chief Commercial Officer at Ubotica. I officially started this role in 2020, but I have been involved with the company since it was founded in 2016.

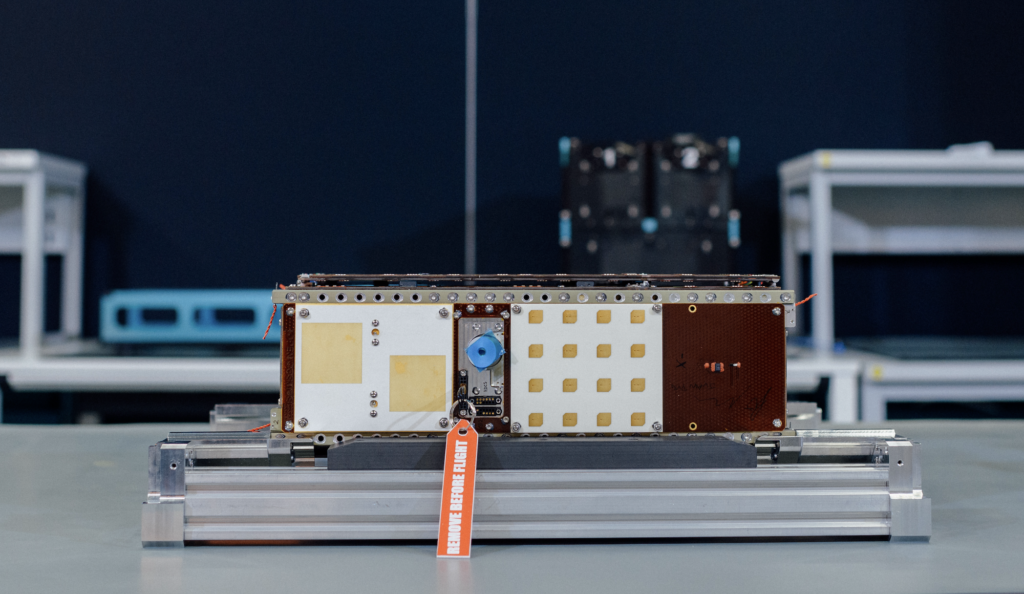

Now, Ubotica has launched its first satellite, CogniSAT-6, on a groundbreaking Live Earth Intelligence mission. Can you tell us about this exciting launch?

We have already carried out a number of flights to investigate the idea of having onboard artificial intelligence (AI) processing on satellites. In each of those cases, we have discovered a piece of the satellite architecture puzzle. Now, we have reached the stage where we want to have full control. We want to showcase our satellite technology to its fullest potential.

CogniSAT-6 will be one of the most connected and intelligent AI-powered Live Earth Intelligence missions that has ever been sent into space. It was launched onboard SpaceX’s Transporter 10 on 4 March and will offer real-time, actionable decision-making. This is a real breakthrough. While it is great to be able to analyse a picture immediately, if you have to wait an hour before you can tell anyone about it back on Earth, the data will have limited value.

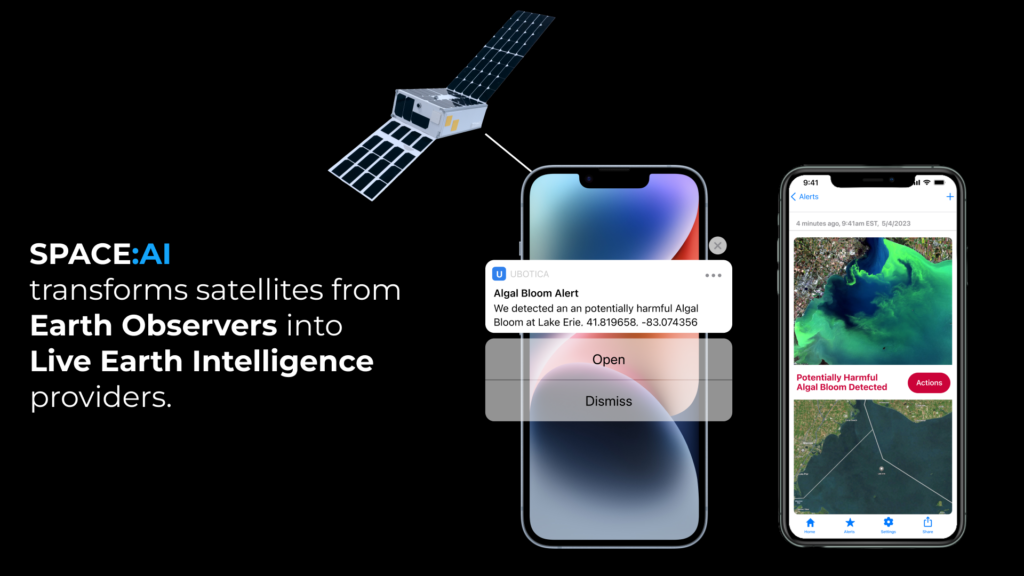

Combining onboard AI processing with a reliable, persistent communication path to the end user is really powerful. It enables users on Earth to access Live Earth Intelligence insights within five minutes of the picture being taken from space. The information can be made available in real-time, which is very important.

SpaceX Transporter 10 (image courtesy of SpaceX)

Ubotica has been working with a number of partners to make this happen. Who has been involved in the project?

First of all, we’re partnered with Open Cosmos, who manufactured and operate the satellite. They have put together all the different system components, including the hyperspectral imager. Open Cosmos constructed the full satellite chassis, solar panels and all the other elements.

Open Cosmos will manage the satellite’s daily operations. Ubotica will handle certain orbits, choosing where images are captured and generating insights from those images. These insights will be delivered to end users through our Live Earth Intelligence services. Open Cosmos will oversee the remaining orbits, determining where to capture images during those periods. They will also manage how the data is transmitted back to Earth via their Open Constellation system, which involves data sharing across multiple satellites, including CogniSAT-6.

Another funding partner in this mission is the UK Catapult. They have a particular interest in funding the imaging technology flying onboard the satellite, which is a hyperspectral sensor. What is unique about hyperspectral sensors is that they not only capture images but can also reveal chemical compositions that are not visible to the human eye. They can measure things like soil moisture, vegetation indices and harmful algal blooms, for example. This will greatly enhance the quality of the data that CogniSAT-6 will be able to collect.

Finally, Exolaunch and SpaceX were involved in the launch. The CogniSAT-6 mission was launched onboard SpaceX’s Transporter 10, and Exolaunch provided the dispenser, which ejects the satellite once it reaches its orbit.

What are the primary objectives of CogniSAT-6?

We have a number of use cases that we are prioritising in this project. For example, our cloud-removal and compression imaging technology is being used in the satellite’s onboard AI solution. This product analyses images, removes those with excessive cloud cover, and compresses the remaining data for transmission back to Earth. This process means that we can reduce the volume of data by a factor of around six to ten and avoid sending multiple images that are too cloudy or obscured to be useful. This leads to cost savings in data management, as well as increased utilisation of the satellite asset, because you receive only useful images from it.
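As an editorial illustration only, the filter-then-compress idea described above can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not Ubotica's implementation: a simple brightness threshold stands in for the onboard AI cloud detection, general-purpose `zlib` compression stands in for the imaging compression, and every function name and threshold value here is hypothetical.

```python
import zlib


def cloud_fraction(pixels, cloud_threshold=200):
    """Fraction of pixels at or above a brightness threshold.

    Assumption: bright pixels are treated as cloud. A real system would
    use a trained model rather than a fixed threshold.
    """
    if not pixels:
        return 0.0
    cloudy = sum(1 for p in pixels if p >= cloud_threshold)
    return cloudy / len(pixels)


def select_and_compress(images, max_cloud_fraction=0.5):
    """Drop images that are too cloudy and compress the rest for downlink.

    `images` maps an image name to a list of 8-bit pixel values (0-255).
    """
    kept = {}
    for name, pixels in images.items():
        if cloud_fraction(pixels) > max_cloud_fraction:
            continue  # too cloudy to be useful, so it is never transmitted
        kept[name] = zlib.compress(bytes(pixels))
    return kept


def downlink_reduction(images, kept):
    """Ratio of raw bytes captured to compressed bytes actually sent."""
    raw = sum(len(pixels) for pixels in images.values())
    sent = sum(len(blob) for blob in kept.values())
    return raw / sent if sent else float("inf")
```

The reduction a sketch like this achieves depends entirely on the input data, so it should not be read as reproducing the six-to-ten-times figure quoted in the interview.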

The next use case is Live Earth Intelligence. This is the ability to take a picture, extract the insights from it onboard and send them back down to Earth within five minutes. This enables you to tell someone in real-time that you have spotted a ship in an image, for example, or that you have identified an algal bloom, or whatever application is of most interest in that geographical area at that particular time.

Then, we are excited to see some automated decision-making happening on the satellite, which is unique. In other words, having onboard intelligence now means that we can not only analyse acquired images, but also draw further conclusions from them, such as where to direct the satellite to take further pictures. We can use that automated decision-making capability to inform future tasking of the satellite, or to inform another trailing satellite in a process known as tipping and cueing.

People are saying that CogniSAT-6 will be one of the most connected, intelligent satellites ever sent up into space. Would you agree with that?

Yes, I think it is fair to say it will be among the most intelligent satellites sent into space. Its main advantage in that respect is having the onboard AI capability, combined with the persistent communications, which can also be bidirectional.

In other words, a user on the ground will be able to communicate back to the satellite, which will be the first of its kind to communicate via mobile app. Our mobile phone applications will enable users to receive alerts from the satellite and view information about whatever their application of interest is. This will also provide them with a path back to the satellite to send a message or command, based on what they have seen, to task the satellite to look in more detail or to move to another location.

Once CogniSAT-6 is in space, will you be able to make changes to accommodate customers’ requirements?

The important thing to note about the onboard AI processing capability is that it’s fundamentally a programmable platform. It’s not dedicated to a single application, which opens up the whole ‘app store’ concept. Where we see Live Earth Intelligence going is that as the satellite passes over different areas of the Earth, we can set different applications in motion, depending on what the sensors are seeing at the time, even during the course of a single orbit.

So, for example, if the satellite is flying over land there’s not much point in directing it to look for ships! It becomes a much more customised, focused way of using applications to solve the problems that are relevant to the particular geographical point on the Earth that the satellite is passing over at any one time. It’s a very powerful new way to think about the whole Earth Observation industry. Rather than capturing data in a very broad sense, we will be able to really zoom in and solve customers’ problems in a much more customised fashion, in real time, all over the world.
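As an editorial illustration only, the idea of switching applications according to what the satellite is passing over can be sketched as a simple lookup. This is a minimal sketch under stated assumptions, not a description of how CogniSAT-6 schedules its software: the surface types, application names and the `plan_orbit` helper are all hypothetical, chosen to echo the examples mentioned in the interview.

```python
# Hypothetical mapping from surface type to the applications worth running there.
APPS_BY_SURFACE = {
    "ocean": ["ship_detection", "algal_bloom_detection"],
    "land": ["soil_moisture", "vegetation_index"],
}


def select_apps(surface_type):
    """Return the applications to run over a given surface type.

    Unknown surface types get an empty list, so nothing runs by default.
    """
    return APPS_BY_SURFACE.get(surface_type, [])


def plan_orbit(ground_track):
    """Build a per-segment application plan for one orbit.

    `ground_track` is a list of (segment_id, surface_type) pairs in the
    order the satellite passes over them.
    """
    return [(segment, select_apps(surface)) for segment, surface in ground_track]


# Example: one orbit crossing ocean, then land, then ocean again.
plan = plan_orbit([("seg-1", "ocean"), ("seg-2", "land"), ("seg-3", "ocean")])
```

In this sketch, ship detection is simply never scheduled over land segments, which is the point made in the answer above.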

How long do you think the satellite will stay up in space?

The satellite is designed for an orbital lifetime in excess of three years. So, we expect to carry on the operation for three years, together with Open Cosmos. Ubotica will control multiple orbits each day for this satellite, so we can have full access to the tasking and outputs that are generated. We can showcase new applications as they arise. It’s a full commercial satellite, not a demonstrator platform.

What types of applications can your customers from different sectors use this technology for?

The maritime sector can use our products to help monitor vessels, as well as spot security issues, such as illegal fishing and environmental problems like oil spills. CogniSAT-6 can also detect ships operating in a designated area of interest. It can then autonomously task following satellites to swarm and conduct deeper analysis into those ships. This is of particular importance for narcotics interdiction or other forms of trafficking.

Another area where this technology will help is in identifying areas where natural disasters are unfolding, or are about to occur. This is where the real-time aspect of our imaging communications is so important due to the urgency of some situations. Real-time alerts are incredibly valuable as they guide users to additional data sources, helping them efficiently mobilise resources and target the right locations.

It’s fascinating to see how real-time alerts can address less obvious issues, such as illegal logging. The heavy machinery involved in illegal logging can cause huge damage. Our data enables a much quicker response, reducing impact more effectively than daily updates can. Illegal loggers currently know the response times of traditional satellite monitoring and can exploit this knowledge to operate undetected for a full day.

Precision agriculture offers significant benefits for farmers. Access to real-time data on crop health can enhance irrigation and fertilisation strategies, leading to higher yields. Additionally, the technology can monitor gas pipelines and power lines to quickly identify issues such as methane leaks. Ground-based monitoring of extensive areas and pipelines is challenging and costly. Early detection and intervention are crucial to avoid the potentially enormous costs of undetected leaks.

Could the technology also be used in cases where there has been a plane crash or a collision at sea and you want to get images to the emergency services quickly, for example?

Yes, it could. We have one application that we’re working on where the satellite can pass over a disaster zone and actually update the map as it flies. So, emergency first responders can follow the path of a forest fire, for example, or see how a road network has been affected by a disaster such as a flood or earthquake. Having that kind of information can reduce delays and help emergency services reach the scene in time.

Geolocation is one of the key elements that we are building on in our imaging technology. If you take a picture of something and you’re saying there’s something of interest in it, you must also be able to say where it is to a certain degree of accuracy. We also want to make our applications as familiar and as easy to use as possible, just like a mobile app on your phone, where you don’t need to know anything about space data to use it. You’re just being told the information you need to know. This information can then also plug into the customers’ established data systems and workflows.

How will the CogniSAT-6 mission help Ubotica with its growth plans?

We have spent many years proving that onboard AI technology can work on other people’s satellites. Being able to showcase what our products can do is great. Now that we have CogniSAT-6, we can show the service really coming to life. We can explore the potential of having real-time Live Earth Intelligence on a handset, and then find out how it can work as a new way of consuming space intelligence and data.

We believe that it is a big step forward in terms of realising the massive potential of Earth observation, which until now has been disjointed and difficult to manage. Now, with our real-time capabilities and powerful imaging technology, we can get truly customised services through to end users to help them solve their problems on the ground quickly and effectively.